Managing and using Deployment Automation in AWS Organizations

Many teams today have fully “hands off” processes for deploying cloud resources and software. A key part of such processes is deployment automation: the technique of using a workflow application to drive various deployment tools - most often Infrastructure-as-Code (IaC) tools.

If that sounds familiar, that’s because such tasks are often part of “CI/CD pipelines”, and the top-level workflow applications - like GitHub Actions, GitLab CI/CD, Jenkins, AWS CodePipeline and CodeBuild - are often called “CI/CD tools”.

However, CI/CD - Continuous Integration and Continuous Delivery / Deployment - actually covers a lot more than just deployment automation. CI/CD also implies build and test activities, and a strategy for managing source code over time (Continuous Integration uses frequent merges of code to a shared branch; Continuous Delivery requires the ability to deploy to production at any time).

With our operations hats on, full CI and CD are often a lot more than we need. That’s why, in the context of operations, and especially for the infrequently updated resources of Organization Operations, I prefer to talk simply about deployment automation.

There are two main deployment automation concerns for teams using AWS Organizations:

- How to manage access to cloud resources for a deployment automation tool

- How to use deployment automation as part of the management of Organization resources

Welcome to Org Ops Part 5

This is the fifth article in my series on AWS Organization Operations - or Org Ops. This series describes how I recommend you approach managing the most fundamental resources in AWS for small and medium-sized companies.

Parts 1 and 2 covered the “why” and “how” of using a multi-Account Organization in AWS. Part 3 covered human user access.

Part 4 described using Infrastructure-as-Code (IaC), and started the story about automation. IaC allows you to define, in code, the resources that you want to exist in your AWS Account. IaC tools make that definition real by working with lower-level cloud APIs. However, there are a couple of gaps:

- IaC tools don’t (typically) run by themselves - instead they are run from a host environment and need to be provided with your IaC definitions.

- IaC tools usually deploy just one particular set of resources at a time. However, a complete deployment process may involve deploying multiple sets of resources and/or software, as well as performing various maintenance tasks, tests, and more.

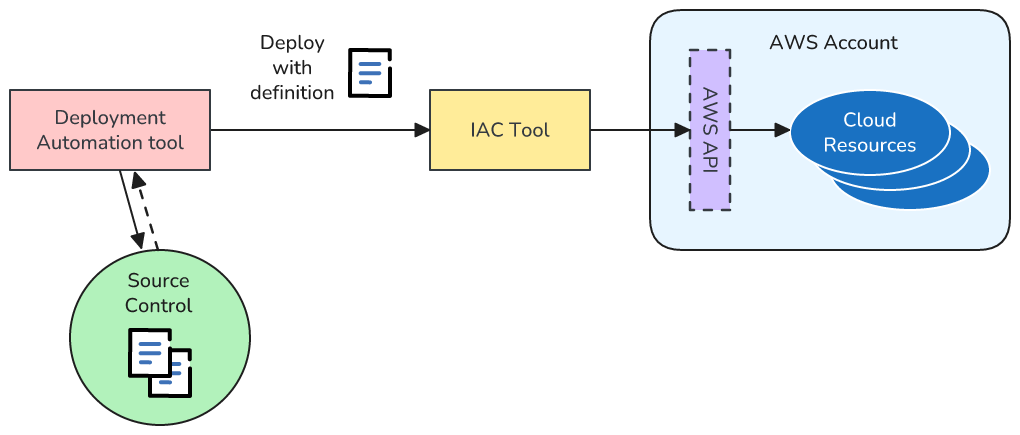

Deployment automation tools fill these gaps by adding another tooling layer on top of your IaC tools. They include the ability to retrieve definition files - usually from source control - and contain their own environments for running commands and scripts. They also usually contain some form of workflow engine to allow driving multiple tasks.

This article describes how you enable your deployment automation tools to integrate with your AWS Accounts, and further, how you can use deployment automation to make looking after your AWS Organization’s resources safer and easier.

Managing AWS Organization access for Deployment Automation tools

Whether you’re using an AWS or a non-AWS deployment automation tool, that tool needs to be provided with the right credentials and permissions to make the changes you want in your AWS environment.

For example, if your automation tool is calling your IaC tool, and your IaC configuration includes S3 buckets and Lambda functions, then your deployment automation tool needs the right access to AWS to manage buckets and Lambda functions.

Like most things security related, managing access involves two separate but related activities - authentication and authorization.

In this article the only AWS access I assume your deployment automation tool chain needs is the ability to call the AWS API. For many scenarios where you’re solely concerned with working with AWS resources this is sufficient. What I don’t cover here is network access, e.g. calling resources attached to private VPCs in your AWS Accounts, which you might need if, for example, you run database migrations during deployment.

Authenticating external Deployment Automation tools

Authentication is the act of one party proving who it is to another party. In this case we need our deployment tool to prove who it is to AWS. There are three typical scenarios:

- You’re using an AWS-provided deployment automation tool, like CodeBuild or CodePipeline. In this case you don’t need to set up any separate authentication, since the AWS service runs with an IAM service role that you give it.

- You’re using a non-AWS deployment tool that supports OpenID Connect (OIDC). I’ll cover how to use this next.

- You’re using a non-AWS deployment tool that does not support OIDC. Instead you need to create an IAM user, and provide your tooling with the user’s credentials. These days this is strongly discouraged because of the security concerns it introduces, and I won’t discuss it further here.

Authentication using OIDC

OpenID Connect (OIDC) is a cross-vendor authentication protocol. It provides a (relatively!) simple way - for us as users at least - to authenticate systems located on different platforms. No long-lived credentials need to be stored in your deployment tool: instead the tool exchanges a short-lived, signed token for temporary AWS credentials, so the security concerns that come with managing and rotating passwords and secret keys go away.

To use OIDC for deployment automation the provider of your automation tool needs to support it. Fortunately, at least GitHub Actions, GitLab CI/CD, and Jenkins do.

The particular way you configure your deployment tool to use OIDC with AWS will change on a tool-by-tool basis, and you’ll need to read your tool’s documentation to know precisely what to do.

As an example, if you’re using GitHub Actions:

- No action is required on the GitHub side - your GitHub user/organization will already have OIDC enabled

- On the AWS side you need to deploy an OIDC provider for GitHub Actions once per Account. You can do this via the web console, CLI, or using IaC tooling. For example, here is a CloudFormation snippet for deploying the provider:

```yaml
GithubOidc:
  Type: AWS::IAM::OIDCProvider
  Properties:
    Url: https://token.actions.githubusercontent.com
    ClientIdList: [ sts.amazonaws.com ]
```

I use GitHub Actions as the example deployment tool in this article, but only to illustrate the basic idea of these techniques. For the complete picture of how to use GitHub Actions with AWS, read the next article in this series here.

Authorizing external Deployment Automation tools

With authentication covered we can move on to authorization. AWS uses IAM roles to authorize access to resources. IAM roles have two main parts:

- What permissions the role grants

- Who can “assume” the role - i.e. who can use the role when making AWS API calls

Permissions are configured by specifying policies. These can be managed policies, whose permissions are defined separately from the role (either by AWS or by you) and can be attached to many roles, or inline policies, whose permissions are defined within the role itself.

As an Org Ops administrator you’ll use both of these techniques over time. Policies can be very broad, e.g. to provide full access to any resource in the Account, or can be extremely specific, like allowing access to individual actions and resources.

As for who can “assume” the role: this is where we tie in either the AWS service identity (when using CodeBuild or CodePipeline) or the OIDC provider (when using a non-AWS tool). I’ll explain more in the next example.
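For the AWS service case, the tie-in is a service principal in the role’s trust policy. As a minimal sketch, assuming CodeBuild and a hypothetical logical ID, a role that CodeBuild can use might look like this. Permissions are deliberately omitted so the focus stays on who can assume the role.

```yaml
CodeBuildDeploymentRole:            # hypothetical logical ID
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          # AWS services use plain sts:AssumeRole, not the web identity variant
          Action: sts:AssumeRole
          Principal:
            # Trust the CodeBuild service to assume this role
            Service: codebuild.amazonaws.com
    # Permissions (ManagedPolicyArns and/or Policies) would be added here
```

A CodeBuild project would then reference this role through its ServiceRole property.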

Roles are free and have no credentials of their own, such as passwords, to manage, so in general it’s a good idea to create different roles for different use cases, allowing you to follow the principle of least privilege. With an Org Ops hat on that means I usually create different roles for different classes of automation workflow, e.g.:

- I create a role per Account for administrative workflows that need elevated privileges to many types of resource

- I create other roles for workflows deploying specific applications that only require limited access to limited resources

IAM roles are cloud resources, and so themselves can be configured using the AWS CLI, web console, or via IaC.

Roles are always deployed to a specific AWS Account and usually only relate to the Account where they are deployed. It’s possible to perform cross-Account activities with roles, but as a rule I strongly encourage you, for the sake of simplicity, to use roles scoped solely to a single Account where possible. If a workflow needs to deploy resources to multiple Accounts, then rather than using cross-Account roles I recommend breaking up the task at the workflow level on a per-Account basis, and having each job / task use the correct role for its Account.
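As a minimal sketch of that per-Account approach in GitHub Actions, the workflow below uses a matrix so that each job assumes the role that lives in its own Account. The Account IDs, role name, Region, template path, and stack names are all hypothetical placeholders.

```yaml
name: Deploy per Account             # hypothetical workflow name
on:
  workflow_dispatch:
permissions:
  id-token: write   # required to request the GitHub OIDC token
  contents: read    # required by actions/checkout
jobs:
  deploy:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        # One job per Account; each entry names that Account's own role
        include:
          - account: development
            role_arn: arn:aws:iam::111111111111:role/GitHubActionsRole
          - account: production
            role_arn: arn:aws:iam::222222222222:role/GitHubActionsRole
    steps:
      - uses: actions/checkout@v4
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: ${{ matrix.role_arn }}
          aws-region: us-east-1
      - run: >-
          aws cloudformation deploy
          --template-file templates/resources.yaml
          --stack-name resources-${{ matrix.account }}
          --no-fail-on-empty-changeset
```

Each job only ever holds credentials for one Account, which keeps the roles simple and single-Account scoped.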

Example - configuring a role for a GitHub Actions workflow

Below is a CloudFormation definition for a role that, once deployed, can be used by a GitHub Actions workflow.

```yaml
GitHubActionsRole:
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action: sts:AssumeRoleWithWebIdentity
          Principal:
            Federated: arn:aws:iam::YOUR_ACCOUNT_ID_HERE:oidc-provider/token.actions.githubusercontent.com
          Condition:
            StringLike:
              token.actions.githubusercontent.com:sub: 'repo:myGitHubOrg/myRepo:*'
    ManagedPolicyArns:
      - 'arn:aws:iam::aws:policy/AdministratorAccess'
```

There are two main parts to this - AssumeRolePolicyDocument and ManagedPolicyArns.

The simple part in this particular case is ManagedPolicyArns - it specifies that anyone assuming this role will be given AdministratorAccess permissions. AdministratorAccess is an AWS managed policy that provides full access to all resources in the Account. Often, though, you’ll want to grant more specific permissions than this, either by listing a narrower managed policy under ManagedPolicyArns or by defining an inline policy under the role’s Policies property.
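As a minimal sketch of the inline option, the role below swaps AdministratorAccess for an inline policy, following the earlier example of a deployment that manages S3 buckets and Lambda functions. The logical ID, policy name, `myapp-` naming prefix, and exact list of actions are hypothetical. A real CloudFormation-driven deployment will usually need further permissions, for example iam:PassRole for a Lambda execution role.

```yaml
GitHubActionsAppRole:               # hypothetical logical ID
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Action: sts:AssumeRoleWithWebIdentity
          Principal:
            Federated: !Sub 'arn:aws:iam::${AWS::AccountId}:oidc-provider/token.actions.githubusercontent.com'
          Condition:
            StringLike:
              token.actions.githubusercontent.com:sub: 'repo:myGitHubOrg/myRepo:*'
    # Inline policy: defined inside the role rather than attached by ARN
    Policies:
      - PolicyName: MyAppDeployment   # hypothetical policy name
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            # CloudFormation stacks whose names start with "myapp-"
            - Effect: Allow
              Action: 'cloudformation:*'
              Resource: !Sub 'arn:aws:cloudformation:${AWS::Region}:${AWS::AccountId}:stack/myapp-*/*'
            # S3 buckets whose names start with "myapp-", and their objects
            - Effect: Allow
              Action: 's3:*'
              Resource: 'arn:aws:s3:::myapp-*'
            # Lambda functions whose names start with "myapp-"
            - Effect: Allow
              Action: 'lambda:*'
              Resource: !Sub 'arn:aws:lambda:${AWS::Region}:${AWS::AccountId}:function:myapp-*'
```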

AssumeRolePolicyDocument defines who can use this role. In this case we’re specifying that the OIDC Provider that we deployed earlier can use this role. However it doesn’t stop there, because of the Condition property, and this is where things get important and tricky.

If we don’t specify a Condition then any GitHub Actions workflow - not just our own! - can access our Account with full Administrator access. This would clearly be a bad idea! So we use a Condition to specify which workflows in GitHub Actions are allowed to use this role. In this case I’ve said that “any workflow in the myRepo repository of the myGitHubOrg GitHub Organization” can use the role. Any other workflow that tries to use this role won’t be able to.

It’s crucial to correctly manage the Condition property of every role that you define for your deployment automation tool if you are using OIDC authentication. For GitHub Actions, the documentation here (and related pages) is required reading. It’s easy to get this wrong, and if you do, you can open yourself up to big security risks. However, once you understand it, it’s by far the cleanest way to manage access between GitHub Actions and AWS, because you don’t need to maintain and rotate credentials.
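As a hedged sketch of a tighter Condition, the fragment below could replace the StringLike wildcard in the role above. It checks the token’s audience and only accepts workflows running from the main branch of myRepo. The `ref:refs/heads/main` form of the sub claim applies to branch-triggered jobs; jobs that reference a GitHub environment present a different value (`repo:myGitHubOrg/myRepo:environment:NAME`), so check GitHub’s OIDC documentation for the claims your workflows actually send.

```yaml
# Fragment: Condition for the AssumeRolePolicyDocument statement
Condition:
  StringEquals:
    # Only accept tokens requested for AWS STS (the default audience used
    # by aws-actions/configure-aws-credentials)
    token.actions.githubusercontent.com:aud: sts.amazonaws.com
    # Only accept workflows running on the main branch of myRepo
    token.actions.githubusercontent.com:sub: 'repo:myGitHubOrg/myRepo:ref:refs/heads/main'
```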

Considering how important these roles are, it’s a good idea to have a rigorous process to store and deploy them, and that’s precisely what deployment automation is for.

Automating the deployment of Organization resources

I mentioned at the start that this article was about two things - managing access for deployment automation tools, and using those same tools for Organization resources. I’m shifting gears now to the second of those points.

Why use deployment automation for your Organization?

In Part 4 of this series I described how you can use Infrastructure-as-Code (IaC) techniques to deploy Organization resources to increase speed, reduce effort, and reduce risk. We can, however, go even further with all of these aspects by using deployment automation tooling on top of our Organization IaC.

When using IaC alone we still face the following questions: where do we retrieve our IaC definitions from, when do we deploy changes, what system do we run the IaC tooling on, and how do we configure that system? If we use deployment automation we can fill in the blanks:

- Deployment automation is closely tied to a source control system, allowing us to use the same versioning and review processes for Organization IaC definitions as we do for our software development lifecycle (SDLC)

- We can deploy changes automatically whenever the IaC definitions are updated in a specific repository and branch in source control, or alternatively use a manual action within the workflow tool

- We run the IaC tooling on a well-defined, ephemeral, host / runner within our deployment automation tool. This ensures we’re not using a manual personal user’s environment that could have unexpected contents.

- We use our deployment automation tool’s configuration system to specify which IAM role each deployment uses, along with other settings such as the target AWS Region.
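As a small illustration of the automatic and manual options, the trigger section of a GitHub Actions workflow can combine both. This fragment is a sketch; the branch name and `templates/` path filter are hypothetical.

```yaml
# Fragment of a hypothetical GitHub Actions workflow file
on:
  # Deploy automatically when IaC definitions change on main
  push:
    branches: [main]
    paths:
      - 'templates/**'   # hypothetical directory holding the IaC definitions
  # Also allow a manual run from the GitHub Actions UI
  workflow_dispatch:
```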

This answers why we want to use deployment automation for our Organizations, and next I’ll describe how.

Hello, source control!

If you’re using a deployment automation tool like CodeBuild, GitHub Actions, or Jenkins, then you’ll likely be using source control to store your infrastructure definitions, just as your developers use it for your application source code.

One question that comes up at this point, with an Org Ops hat on, is: which repository or repositories do we use for our Org Ops IaC?

My recommendation, for small companies at least, is that you start by storing all of your Org Ops related IaC templates, etc., in one repository. Over time you might break this out into multiple repos, but typically it’s worth keeping everything in one place to start, since many Organization aspects relate to each other and/or use similar techniques for maintenance.

I’ve created an example AWS Organization called “Superior Widgets” for this article series. I store all the IaC for this in the (public!) GitHub repo here, named aws-org-ops. You can use this repo if you want for inspiration for your own Org Ops work!

Bootstrapping and maintaining OIDC and Admin access

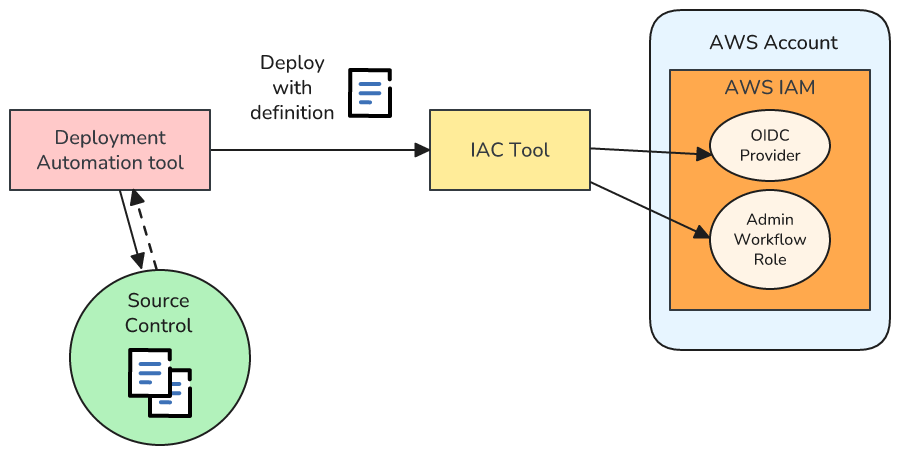

To use deployment automation with our AWS Organization we need to configure and maintain two core elements in each Account in the Organization:

- The OIDC provider, if we’re using an AWS-external deployment automation tool that supports OIDC

- A role that can be used by administration deployment automation workflows

To do this in a safe and repeatable manner, we can:

- Create a definition for the OIDC provider and role with our IaC tool. For example, if using CloudFormation, we can create a template using the AWS::IAM::OIDCProvider and AWS::IAM::Role resources I’ve already shown in this article

- Save this definition in our Org Ops source control repository

- Define a workflow in our deployment automation tool that will read the definition from Source Control, and then invoke our IaC tool
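A minimal sketch of such a workflow in GitHub Actions follows, assuming the OIDC provider and an admin role named GitHubActionsRole already exist in the target Account. The Account ID, Region, template path, and stack name are hypothetical. The `id-token: write` permission is what allows the job to request a GitHub OIDC token.

```yaml
name: Deploy admin OIDC resources    # hypothetical workflow name
on:
  workflow_dispatch:
permissions:
  id-token: write   # required to request the GitHub OIDC token
  contents: read    # required by actions/checkout
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      # Read the IaC definition from source control
      - uses: actions/checkout@v4
      # Authenticate via OIDC and assume the admin workflow role
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::111111111111:role/GitHubActionsRole
          aws-region: us-east-1
      # Invoke the IaC tool (here, CloudFormation via the AWS CLI)
      - run: >-
          aws cloudformation deploy
          --template-file admin/github-oidc-admin.yaml
          --stack-name github-actions-admin
          --capabilities CAPABILITY_NAMED_IAM
          --no-fail-on-empty-changeset
```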

The overall flow looks like this:

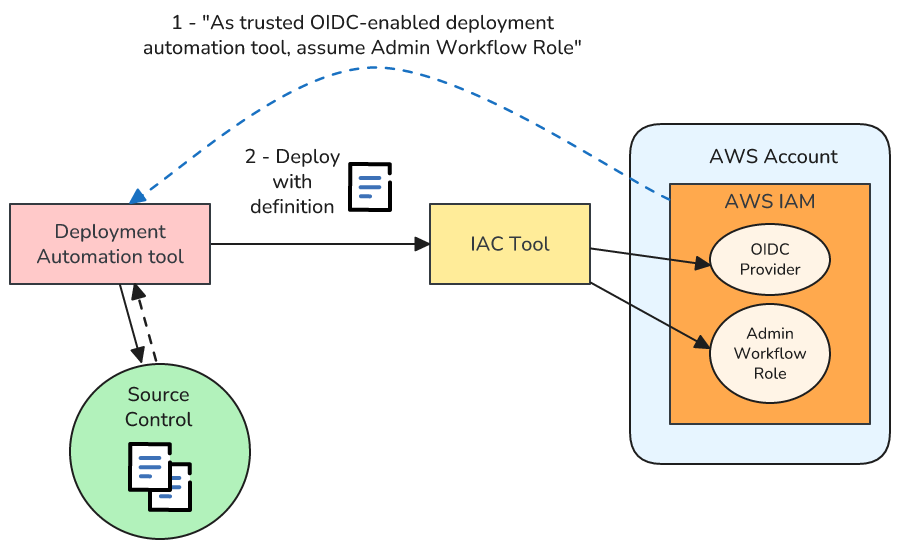

However there’s a small problem, at least to start. To be able to deploy resources to AWS, our deployment automation tool first needs to authenticate with AWS and assume a role … but to do so it requires the very same resources that this workflow will deploy.

Several times in this series I’ve mentioned that Org Ops tasks often end up being a little self-referential and usually have bootstrap issues. For example, what user do you use to configure user management? Deployment automation is no exception! Yet again, the Org Ops snake eats its own tail.

Therefore, the first time, we have to deploy the administration resources manually. After that the workflow can assume its role and update its own resources.
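One way to make that first manual deployment and all later automated ones use the same definition is to keep the OIDC provider and admin role in a single template. The sketch below assumes CloudFormation, a hypothetical role name of GitHubActionsRole, and two hypothetical parameters for the GitHub organization and repository. It references the provider with `!Ref` (which returns the provider’s ARN) rather than hard-coding an Account ID.

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: Hypothetical bootstrap template for GitHub Actions admin access
Parameters:
  GitHubOrg:
    Type: String
  RepositoryName:
    Type: String
Resources:
  GithubOidc:
    Type: AWS::IAM::OIDCProvider
    Properties:
      Url: https://token.actions.githubusercontent.com
      ClientIdList: [ sts.amazonaws.com ]
  GitHubActionsRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: GitHubActionsRole    # fixed name so workflows know the ARN
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action: sts:AssumeRoleWithWebIdentity
            Principal:
              # Ref on an AWS::IAM::OIDCProvider returns its ARN
              Federated: !Ref GithubOidc
            Condition:
              StringEquals:
                token.actions.githubusercontent.com:aud: sts.amazonaws.com
              StringLike:
                token.actions.githubusercontent.com:sub: !Sub 'repo:${GitHubOrg}/${RepositoryName}:*'
      ManagedPolicyArns:
        - 'arn:aws:iam::aws:policy/AdministratorAccess'
```

You could deploy this once by hand, for example with `aws cloudformation deploy --template-file bootstrap.yaml --stack-name github-actions-admin --capabilities CAPABILITY_NAMED_IAM --parameter-overrides GitHubOrg=myGitHubOrg RepositoryName=aws-org-ops` (file and stack names hypothetical), and then have the workflow update the same stack name so that it takes over management of those resources.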

I mentioned above that we need to perform this work for each Account in our Organization. I find this is a good use case for the StackSet technique I introduced in Part 4, and I give a full example of doing so in Part 6.
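As a rough sketch of that StackSet approach, the resource below, deployed from the management Account (or a delegated administrator Account), rolls a bootstrap template out to every Account in an OU and to any Account added to it later. The StackSet name, OU ID, and template location are hypothetical. Since IAM resources are global, a single Region is enough. Note also that service-managed StackSets do not deploy stack instances to the management Account itself, so that Account still needs its own deployment.

```yaml
AdminOidcStackSet:                   # hypothetical logical ID
  Type: AWS::CloudFormation::StackSet
  Properties:
    StackSetName: github-actions-admin
    PermissionModel: SERVICE_MANAGED
    AutoDeployment:
      Enabled: true                  # also deploy to Accounts added to the OU later
      RetainStacksOnAccountRemoval: false
    Capabilities:
      - CAPABILITY_NAMED_IAM
    StackInstancesGroup:
      - DeploymentTargets:
          OrganizationalUnitIds:
            - ou-abcd-11111111       # hypothetical OU ID
        Regions:
          - us-east-1                # IAM is global, so one Region suffices
    # Hypothetical location of the bootstrap template
    TemplateURL: https://example-bucket.s3.amazonaws.com/github-oidc-admin.yaml
```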

Managing workflow access for application repositories

Once we have OIDC access for administration workflows we can start building out access for other deployment automation. One example is access for application workflows - i.e. deployment automation workflows used in the context of application source repositories, for deploying infrastructure and code specific to an application. I mentioned earlier that because IAM roles are free it often makes sense to use different roles for different use cases, and this is such an example.

Even though I’m talking about application-scope workflows, I recommend that you manage the roles that these workflows use as an Org Ops responsibility. That’s because of the concerns I raised earlier with needing to pay close attention to the specific conditions within the role definitions.

Managing the application workflow roles is very similar to managing the admin workflow roles, but this time we don’t need to deploy an OIDC provider since we only need to do that once per AWS account, and we already did so in the previous step.
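A minimal sketch of such an application role template is below. Rather than declaring an OIDC provider, it refers to the Account’s existing provider by ARN. The role name and parameters are hypothetical; the parameters are one possible way to express small per-Account differences, such as which branch may deploy or which managed policies are attached.

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Description: Hypothetical application workflow role (the OIDC provider already exists)
Parameters:
  AppRepository:
    Type: String
    Description: GitHub repository in org/repo form, e.g. myGitHubOrg/myAppRepo
  AllowedBranch:
    Type: String
    Default: main
  PermissionsPolicyArns:
    Type: CommaDelimitedList
    Description: ARNs of the managed policies this application's workflows need
Resources:
  AppWorkflowRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: MyAppWorkflowRole
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action: sts:AssumeRoleWithWebIdentity
            Principal:
              # No AWS::IAM::OIDCProvider here: reference the existing one by ARN
              Federated: !Sub 'arn:aws:iam::${AWS::AccountId}:oidc-provider/token.actions.githubusercontent.com'
            Condition:
              StringEquals:
                token.actions.githubusercontent.com:aud: sts.amazonaws.com
                # Only workflows on the allowed branch of the application repo
                token.actions.githubusercontent.com:sub: !Sub 'repo:${AppRepository}:ref:refs/heads/${AllowedBranch}'
      # Limited permissions, supplied per Account
      ManagedPolicyArns: !Ref PermissionsPolicyArns
```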

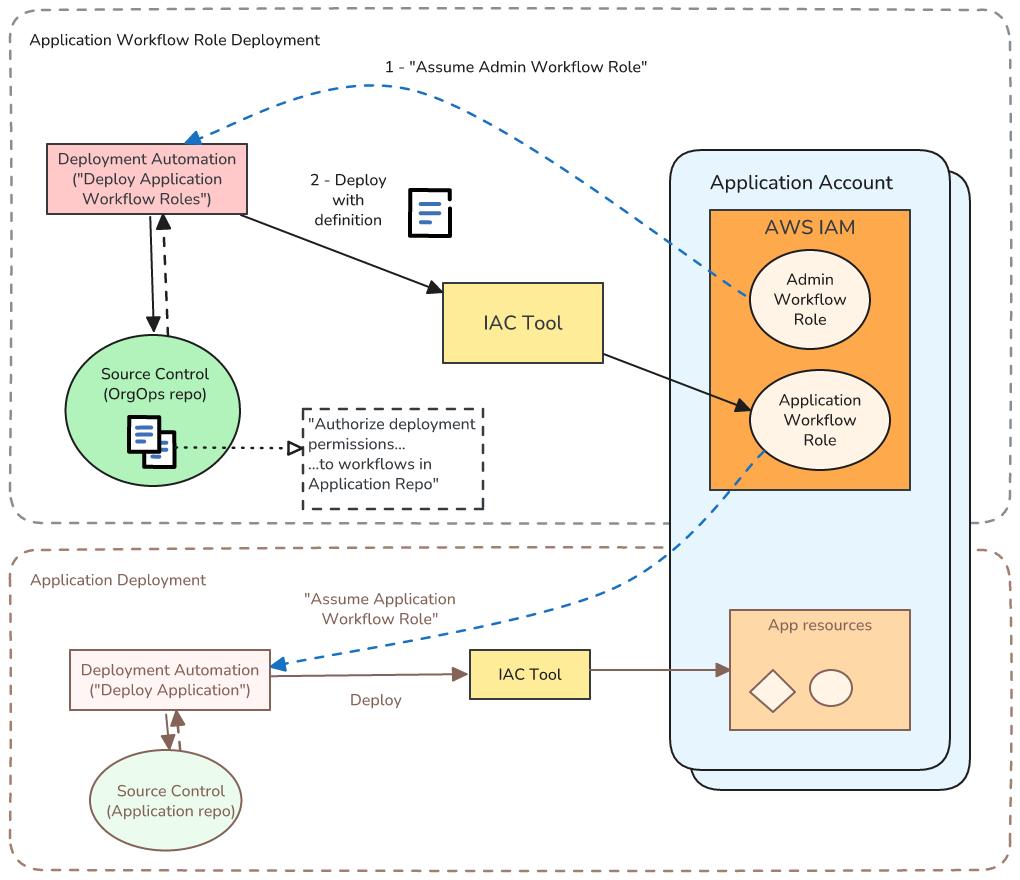

Deploying these roles can get a little confusing because we’re using one workflow to deploy permissions for a different workflow. Here’s what it looks like

What we care about now is the workflow at the top of the diagram - in the section Application Workflow Role Deployment. A few points to highlight:

- Even though we are authorizing access for workflows in the application repo I store the definition of this role in the Org Ops repo. I do so because the workflow to deploy roles needs administration access to the application accounts. Also I like to keep all of my OIDC roles in one place because they are such a big security concern.

- This workflow assumes the Admin Workflow Role (deployed in the previous section) to be able to deploy the Application Workflow Role.

- Often we will have multiple AWS Accounts per Application workload, e.g. for production vs. development. Therefore the deployment automation workflow will need to repeat the deployment process per Account. In this case - instead of StackSets - I usually use the “fan out” approach I introduced in Part 4. I do so because there are often small differences between each Account’s role.

Once this workflow is successful we can create workflows associated with our application repo, and those workflows will only have scope to deploy to the application accounts, with the permissions we specified in the role definition. These workflows are represented by the lower Application Deployment part of the diagram.
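A minimal sketch of such an application workflow in GitHub Actions follows. It deploys to a development Account and then, only if that succeeds, to a production Account. It assumes the application role is named MyAppWorkflowRole in both Accounts; the Account IDs, Region, template path, and stack name are hypothetical.

```yaml
# Hypothetical workflow in the application repository
name: Deploy application
on:
  push:
    branches: [main]
permissions:
  id-token: write   # required to request the GitHub OIDC token
  contents: read    # required by actions/checkout
jobs:
  deploy-development:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::333333333333:role/MyAppWorkflowRole
          aws-region: us-east-1
      - run: >-
          aws cloudformation deploy
          --template-file infra/app.yaml
          --stack-name my-app
          --capabilities CAPABILITY_IAM
          --no-fail-on-empty-changeset
  deploy-production:
    needs: deploy-development   # only runs if the development deployment succeeded
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::444444444444:role/MyAppWorkflowRole
          aws-region: us-east-1
      - run: >-
          aws cloudformation deploy
          --template-file infra/app.yaml
          --stack-name my-app
          --capabilities CAPABILITY_IAM
          --no-fail-on-empty-changeset
```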

I give a full example of this scenario in Part 6.

Deploying other types of Organization resources

Now that we can deploy the roles that workflows use, and have examples of workflows using those roles, we can automate the deployment of pretty much any Organization resource that can be deployed using Infrastructure-as-Code.
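For instance, a service control policy (SCP) can be defined with CloudFormation’s AWS::Organizations::Policy resource and deployed by a workflow that assumes a role in the management Account. The sketch below is only an illustration: the logical ID, policy name, and OU ID are hypothetical, and the single Deny statement stops member Accounts in that OU from leaving the Organization.

```yaml
DenyLeaveOrganizationScp:            # hypothetical logical ID
  Type: AWS::Organizations::Policy
  Properties:
    Name: DenyLeaveOrganization
    Type: SERVICE_CONTROL_POLICY
    Description: Prevent member Accounts from leaving the Organization
    Content:
      Version: '2012-10-17'
      Statement:
        - Effect: Deny
          Action: organizations:LeaveOrganization
          Resource: '*'
    TargetIds:
      - ou-abcd-11111111             # hypothetical OU ID
```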

In the example repository for this article series I give several examples - take a look in the workflows directory here. I also walk through various examples in detail, for GitHub Actions, in the next part of this series.

Summary and Next Steps

This article introduced you to using deployment automation, often discussed under the umbrella of CI/CD, with your AWS Organization. It covered both how to enable your deployment automation to drive AWS, and how to use deployment automation as part of the management of your Organization.

I expand on this article in the next part of this series - “AWS Organization Deployment Automation with GitHub Actions” - which takes the ideas I’ve introduced here and develops them into full, working examples.

A couple of areas I did not cover here are testing and monitoring of your deployment workflows. Both are useful, but neither is particularly different for Org Ops than for other uses of CI/CD tooling.

Feedback + Questions

If you have any feedback or questions then feel free to email me at mike@symphonia.io, or contact me at @mikebroberts@hachyderm.io on Mastodon, or at @mikebroberts.com on BlueSky.